PROFESSIONAL-CLOUD-DEVELOPER Online Practice Questions and Answers

For this question refer to the HipLocal case study.

HipLocal wants to reduce the latency of their services for users in global locations. They have created read replicas of their database in locations where their users reside and configured their service to route read traffic to those replicas. How should they further reduce latency for all database interactions with the least amount of effort?

A. Migrate the database to Bigtable and use it to serve all global user traffic.

B. Migrate the database to Cloud Spanner and use it to serve all global user traffic.

C. Migrate the database to Firestore in Datastore mode and use it to serve all global user traffic.

D. Migrate the services to Google Kubernetes Engine and use a load balancer service to better scale the application.

Your organization has recently begun an initiative to replatform their legacy applications onto Google Kubernetes Engine. You need to decompose a monolithic application into microservices. Multiple instances have read and write access to a configuration file, which is stored on a shared file system. You want to minimize the effort required to manage this transition, and you want to avoid rewriting the application code. What should you do?

A. Create a new Cloud Storage bucket, and mount it via FUSE in the container.

B. Create a new persistent disk, and mount the volume as a shared PersistentVolume.

C. Create a new Filestore instance, and mount the volume as an NFS PersistentVolume.

D. Create a new ConfigMap and volumeMount to store the contents of the configuration file.
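For the Filestore option, a Filestore share is presented to GKE as an ordinary NFS export, so a PersistentVolume only needs the instance's IP address and share name. The following is a minimal sketch, assuming a hypothetical Filestore instance at 10.0.0.2 with a share named vol1 and hypothetical object names; it builds a PersistentVolume and a matching PersistentVolumeClaim as Python dicts and prints them as JSON, which `kubectl apply -f` also accepts.

```python
import json


def nfs_persistent_volume(name: str, server_ip: str, share: str, capacity: str = "1Ti") -> dict:
    """PersistentVolume pointing at an NFS export such as a Filestore share.

    ReadWriteMany lets Pods on several nodes mount the volume read/write at once,
    which is what a configuration file shared by many instances needs.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "accessModes": ["ReadWriteMany"],
            "nfs": {"server": server_ip, "path": f"/{share}"},
        },
    }


def nfs_persistent_volume_claim(name: str, pv_name: str, capacity: str = "1Ti") -> dict:
    """Claim that binds explicitly to the PersistentVolume above.

    An empty storageClassName stops GKE from dynamically provisioning a new disk.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": "",
            "volumeName": pv_name,
            "resources": {"requests": {"storage": capacity}},
        },
    }


if __name__ == "__main__":
    # Hypothetical values: substitute your Filestore instance's IP address and share name.
    pv = nfs_persistent_volume("config-share", "10.0.0.2", "vol1")
    pvc = nfs_persistent_volume_claim("config-share-claim", "config-share")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}, indent=2))
```

Pods then mount `config-share-claim` at the path the application already reads, so the code itself does not change.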

The new version of your containerized application has been tested and is ready to deploy to production on Google Kubernetes Engine. You were not able to fully load-test the new version in pre-production environments, and you need to make sure that it does not have performance problems once deployed. Your deployment must be automated. What should you do?

A. Use Cloud Load Balancing to slowly ramp up traffic between versions. Use Cloud Monitoring to look for performance issues.

B. Deploy the application via a continuous delivery pipeline using canary deployments. Use Cloud Monitoring to look for performance issues, and ramp up traffic as the metrics support it.

C. Deploy the application via a continuous delivery pipeline using blue/green deployments. Use Cloud Monitoring to look for performance issues, and launch fully when the metrics support it.

D. Deploy the application using kubectl and set the spec.updateStrategy.type to RollingUpdate. Use Cloud Monitoring to look for performance issues, and run the kubectl rollback command if there are any issues.
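A canary rollout is essentially a control loop: send a small share of traffic to the new version, check its metrics, then either widen the share or roll back. The sketch below illustrates that loop only. The step percentages, thresholds, and the `observe` callback are hypothetical stand-ins for what a delivery tool (for example Cloud Deploy's canary strategy) and Cloud Monitoring would actually provide.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CanaryMetrics:
    error_rate: float      # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float  # 95th-percentile latency in milliseconds


def run_canary(
    steps: List[int],
    observe: Callable[[int], CanaryMetrics],
    max_error_rate: float = 0.01,       # hypothetical threshold
    max_p95_latency_ms: float = 300.0,  # hypothetical threshold
) -> List[int]:
    """Ramp canary traffic through `steps` (percentages), checking metrics at each step.

    Returns the traffic percentages applied, in order. If a step breaches a
    threshold, canary traffic is set back to 0 and the ramp stops.
    """
    applied: List[int] = []
    for percent in steps:
        applied.append(percent)
        metrics = observe(percent)
        if metrics.error_rate > max_error_rate or metrics.p95_latency_ms > max_p95_latency_ms:
            applied.append(0)  # roll back: all traffic returns to the stable version
            break
    return applied


if __name__ == "__main__":
    # Hypothetical observations: latency degrades once the canary takes half the traffic.
    def observe(percent: int) -> CanaryMetrics:
        return CanaryMetrics(error_rate=0.001, p95_latency_ms=120.0 if percent < 50 else 900.0)

    print(run_canary([5, 25, 50, 100], observe))  # [5, 25, 50, 0]
```

The gradual ramp is what distinguishes this from blue/green, where traffic switches between versions all at once.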

Your company has created an application that uploads a report to a Cloud Storage bucket. When the report is uploaded to the bucket, you want to publish a message to a Cloud Pub/Sub topic. You want to implement a solution that will take a small amount of effort to implement. What should you do?

A. Configure the Cloud Storage bucket to trigger Cloud Pub/Sub notifications when objects are modified.

B. Create an App Engine application to receive the file; when it is received, publish a message to the Cloud Pub/Sub topic.

C. Create a Cloud Function that is triggered by the Cloud Storage bucket. In the Cloud Function, publish a message to the Cloud Pub/Sub topic.

D. Create an application deployed in a Google Kubernetes Engine cluster to receive the file; when it is received, publish a message to the Cloud Pub/Sub topic.
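Cloud Storage can publish to Pub/Sub by itself once a notification configuration exists on the bucket, for example `gsutil notification create -t TOPIC -f json -e OBJECT_FINALIZE gs://BUCKET` (TOPIC and BUCKET are placeholders). Each message carries attributes such as `eventType`, `bucketId`, and `objectId`. The sketch below shows how a subscriber might recognize a new upload from those attributes; the bucket and object names in the usage example are hypothetical.

```python
from typing import Dict, Optional


def uploaded_object(attributes: Dict[str, str]) -> Optional[str]:
    """Return "gs://bucket/object" for a new-upload notification, or None otherwise.

    OBJECT_FINALIZE is the event type Cloud Storage uses when a new object
    (or a new generation of an existing object) has been written.
    """
    if attributes.get("eventType") != "OBJECT_FINALIZE":
        return None
    return f"gs://{attributes['bucketId']}/{attributes['objectId']}"


if __name__ == "__main__":
    # Hypothetical attributes for an uploaded report.
    attrs = {"eventType": "OBJECT_FINALIZE", "bucketId": "reports-bucket", "objectId": "2024/q1.pdf"}
    print(uploaded_object(attrs))  # gs://reports-bucket/2024/q1.pdf
```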

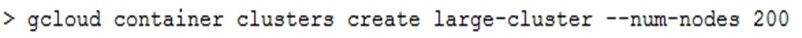

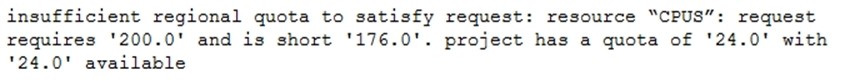

You are creating a Google Kubernetes Engine (GKE) cluster and run this command:

The command fails with the error:

You want to resolve the issue. What should you do?

A. Request additional GKE quota in the GCP Console.

B. Request additional Compute Engine quota in the GCP Console.

C. Open a support case to request additional GKE quota.

D. Decouple services in the cluster, and rewrite new clusters to function with fewer cores.

You are configuring a continuous integration pipeline using Cloud Build to automate the deployment of new container images to Google Kubernetes Engine (GKE). The pipeline builds the application from its source code, runs unit and integration tests in separate steps, and pushes the container to Container Registry. The application runs on a Python web server.

The Dockerfile is as follows:

    FROM python:3.7-alpine
    COPY . /app
    WORKDIR /app
    RUN pip install -r requirements.txt
    CMD [ "gunicorn", "-w 4", "main:app" ]

You notice that Cloud Build runs are taking longer than expected to complete. You want to decrease the build time. What should you do? (Choose two.)

A. Select a virtual machine (VM) size with higher CPU for Cloud Build runs.

B. Deploy a Container Registry on a Compute Engine VM in a VPC, and use it to store the final images.

C. Cache the Docker image for subsequent builds using the --cache-from argument in your build config file.

D. Change the base image in the Dockerfile to ubuntu:latest, and install Python 3.7 using a package manager utility.

E. Store application source code on Cloud Storage, and configure the pipeline to use gsutil to download the source code.
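Option C refers to a common Cloud Build pattern: pull the previously pushed image, then pass it to `docker build --cache-from` so unchanged layers are reused. Cloud Build accepts its config as JSON as well as YAML, so the sketch below builds that config as a Python dict and prints it. The image name is a hypothetical placeholder; `$PROJECT_ID` is substituted by Cloud Build at run time.

```python
import json


def cached_build_config(image: str) -> dict:
    """Cloud Build config that reuses the last pushed image as a layer cache.

    Step 1 pulls the previous image; `|| exit 0` keeps the very first build
    from failing when no image exists yet. Step 2 passes that image to
    --cache-from so layers whose inputs have not changed are not rebuilt.
    """
    return {
        "steps": [
            {
                "name": "gcr.io/cloud-builders/docker",
                "entrypoint": "bash",
                "args": ["-c", f"docker pull {image} || exit 0"],
            },
            {
                "name": "gcr.io/cloud-builders/docker",
                "args": ["build", "-t", image, "--cache-from", image, "."],
            },
        ],
        "images": [image],
    }


if __name__ == "__main__":
    # Hypothetical image name.
    print(json.dumps(cached_build_config("gcr.io/$PROJECT_ID/my-app:latest"), indent=2))
```

With the Dockerfile above, `COPY . /app` runs before `pip install`, so any source change invalidates the dependency layer; copying only `requirements.txt` and installing before copying the rest of the source would let the cache cover that step too.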

You are porting an existing Apache/MySQL/PHP application stack from a single machine to Google Kubernetes Engine. You need to determine how to containerize the application. Your approach should follow Google-recommended best practices for availability. What should you do?

A. Package each component in a separate container. Implement readiness and liveness probes.

B. Package the application in a single container. Use a process management tool to manage each component.

C. Package each component in a separate container. Use a script to orchestrate the launch of the components.

D. Package the application in a single container. Use a bash script as an entrypoint to the container, and then spawn each component as a background job.

Your company has a BigQuery data mart that provides analytics information to hundreds of employees. One user wants to run jobs without interrupting important workloads. This user isn't concerned about the time it takes to run these jobs. You want to fulfill this request while minimizing cost to the company and the effort required on your part. What should you do?

A. Ask the user to run the jobs as batch jobs.

B. Create a separate project for the user to run jobs.

C. Grant the user the job.user role in the existing project.

D. Allow the user to run jobs when important workloads are not running.
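Batch priority is a per-job setting rather than a separate project or role. BigQuery queues BATCH queries and starts them when idle resources become available, while INTERACTIVE is the default. The sketch below shows the request body for the `jobs.insert` REST method with that setting; the SQL string is a hypothetical example. The `bq` CLI exposes the same option as `bq query --batch`, and the Python client as `bigquery.QueryJobConfig(priority=bigquery.QueryPriority.BATCH)`.

```python
import json


def batch_query_job(sql: str) -> dict:
    """Request body for BigQuery jobs.insert that runs `sql` at BATCH priority."""
    return {
        "configuration": {
            "query": {
                "query": sql,
                "useLegacySql": False,
                "priority": "BATCH",  # default is INTERACTIVE
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical query.
    print(json.dumps(batch_query_job("SELECT COUNT(*) FROM `my_dataset.sales`"), indent=2))
```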

You have a web application that publishes messages to Pub/Sub. You plan to build new versions of the application locally and need to quickly test Pub/Sub integration for each new build. How should you configure local testing?

A. Run the gcloud config set api_endpoint_overrides/pubsub https://pubsubemulator.googleapis.com.com/ command to change the Pub/Sub endpoint prior to starting the application.

B. In the Google Cloud console, navigate to the API Library and enable the Pub/Sub API. When developing locally, configure your application to call pubsub.googleapis.com.

C. Install Cloud Code on the integrated development environment (IDE). Navigate to Cloud APIs, and enable Pub/Sub against a valid Google Project ID. When developing locally, configure your application to call pubsub.googleapis.com.

D. Install the Pub/Sub emulator using gcloud and start the emulator with a valid Google Project ID. When developing locally, configure your application to use the local emulator by exporting the PUBSUB_EMULATOR_HOST variable.
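The emulator is started with `gcloud beta emulators pubsub start --project=PROJECT_ID`, and `$(gcloud beta emulators pubsub env-init)` exports `PUBSUB_EMULATOR_HOST` into the shell (PROJECT_ID is a placeholder). The official client libraries check that variable themselves, so the sketch below only illustrates the decision they make, for example in a hand-written HTTP client: use the local emulator over plain HTTP when the variable is set, otherwise the production endpoint.

```python
import os
from typing import Mapping, Optional


def pubsub_endpoint(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the base URL the application should send Pub/Sub requests to."""
    env = os.environ if env is None else env
    emulator_host = env.get("PUBSUB_EMULATOR_HOST")
    if emulator_host:
        # Local emulator: no TLS and no real credentials needed.
        return f"http://{emulator_host}"
    return "https://pubsub.googleapis.com"


if __name__ == "__main__":
    print(pubsub_endpoint({"PUBSUB_EMULATOR_HOST": "localhost:8085"}))  # http://localhost:8085
```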

Your code is running on Cloud Functions in project A. It is supposed to write an object in a Cloud Storage bucket owned by project B. However, the write call is failing with the error "403 Forbidden".

What should you do to correct the problem?

A. Grant your user account the roles/storage.objectCreator role for the Cloud Storage bucket.

B. Grant your user account the roles/iam.serviceAccountUser role for the service-PROJECTA@gcf-admin-robot.iam.gserviceaccount.com service account.

C. Grant the [email protected] service account the roles/storage.objectCreator role for the Cloud Storage bucket.

D. Enable the Cloud Storage API in project B.
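A cross-project 403 like this is usually fixed on the bucket's IAM policy: the identity the function runs as needs a role such as roles/storage.objectCreator on the project B bucket, granted for example with `gcloud storage buckets add-iam-policy-binding gs://BUCKET --member=serviceAccount:EMAIL --role=roles/storage.objectCreator` (BUCKET and EMAIL are placeholders). The sketch below shows the read-modify-write step on the policy JSON itself; the service account address in the test is hypothetical.

```python
import copy
from typing import Dict, List


def grant_bucket_role(policy: Dict, role: str, member: str) -> Dict:
    """Return a copy of a bucket IAM policy with `member` added to `role`.

    Existing bindings are preserved; a new binding is created only when the
    role has none yet, and adding the same member twice is a no-op.
    """
    updated = copy.deepcopy(policy)
    bindings: List[Dict] = updated.setdefault("bindings", [])
    for binding in bindings:
        if binding.get("role") == role:
            members = binding.setdefault("members", [])
            if member not in members:
                members.append(member)
            return updated
    bindings.append({"role": role, "members": [member]})
    return updated
```

Members use the `serviceAccount:` prefix followed by the account's email address.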