DATABRICKS-MACHINE-LEARNING-PROFESSIONAL Online Practice Questions and Answers

After a data scientist noticed that a column was missing from a production feature set stored as a Delta table, the machine learning engineering team has been tasked with determining when the column was dropped from the feature set. Which of the following SQL commands can be used to accomplish this task?

A. VERSION

B. DESCRIBE

C. HISTORY

D. DESCRIBE HISTORY

E. TIMESTAMP

A data scientist has developed a scikit-learn random forest model model, but they have not yet logged model with MLflow. They want to obtain the input schema and the output schema of the model so they can document what type of data is

expected as input.

Which of the following MLflow operations can be used to perform this task?

A. mlflow.models.schema.infer_schema

B. mlflow.models.signature.infer_signature

C. mlflow.models.Model.get_input_schema

D. mlflow.models.Model.signature

E. There is no way to obtain the input schema and the output schema of an unlogged model.

Which of the following deployment paradigms can centrally compute predictions for a single record with exceedingly fast results?

A. Streaming

B. Batch

C. Edge/on-device

D. None of these strategies will accomplish the task.

E. Real-time

A machine learning engineering team has written predictions computed in a batch job to a Delta table for querying. However, the team has noticed that the querying is running slowly. The team has already tuned the size of the data files. Upon

investigating, the team has concluded that the rows meeting the query condition are sparsely located throughout each of the data files.

Based on the scenario, which of the following optimization techniques could speed up the query by colocating similar records while considering values in multiple columns?

A. Z-Ordering

B. Bin-packing

C. Write as a Parquet file

D. Data skipping

E. Tuning the file size

Which of the following describes the purpose of the context parameter in the predict method of Python models for MLflow?

A. The context parameter allows the user to specify which version of the registered MLflow Model should be used based on the given application's current scenario

B. The context parameter allows the user to document the performance of a model after it has been deployed

C. The context parameter allows the user to include relevant details of the business case to allow downstream users to understand the purpose of the model

D. The context parameter allows the user to provide the model with completely custom if-else logic for the given application's current scenario

E. The context parameter allows the user to provide the model access to objects like preprocessing models or custom configuration files

A machine learning engineer wants to programmatically create a new Databricks Job whose schedule depends on the result of some automated tests in a machine learning pipeline. Which of the following Databricks tools can be used to programmatically create the Job?

A. MLflow APIs

B. AutoML APIs

C. MLflow Client

D. Jobs cannot be created programmatically

E. Databricks REST APIs

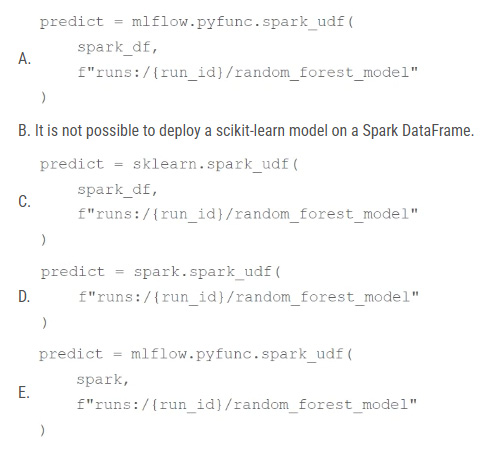

A machine learning engineer has developed a random forest model using scikit-learn, logged the model using MLflow as random_forest_model, and stored its run ID in the run_id Python variable. They now want to deploy that model by performing batch inference on a Spark DataFrame spark_df.

Which of the following code blocks can they use to create a function called predict that they can use to complete the task?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

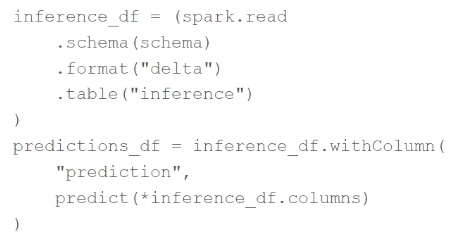

A machine learning engineer is using the following code block as part of a batch deployment pipeline:

Which of the following changes needs to be made so this code block will work when the inference table is a stream source?

A. Replace "inference" with the path to the location of the Delta table

B. Replace schema(schema) with option("maxFilesPerTrigger", 1)

C. Replace spark.read with spark.readStream

D. Replace format("delta") with format("stream")

E. Replace predict with a stream-friendly prediction function

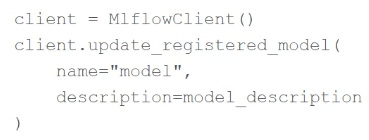

A machine learning engineering manager has asked all of the engineers on their team to add text descriptions to each of the model projects in the MLflow Model Registry. They are starting with the model project "model" and they'd like to add

the text in the model_description variable.

The team is using the following line of code:

Which of the following changes does the team need to make to the above code block to accomplish the task?

A. Replace update_registered_model with update_model_version

B. There no changes necessary

C. Replace description with artifact

D. Replace client.update_registered_model with mlflow

E. Add a Python model as an argument to update_registered_model

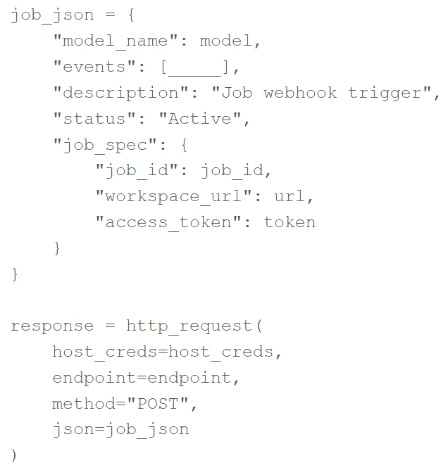

A machine learning engineer is attempting to create a webhook that will trigger a Databricks Job job_id when a model version for model model transitions into any MLflow Model Registry stage. They have the following incomplete code block:

Which of the following lines of code can be used to fill in the blank so that the code block accomplishes the task?

A. "MODEL_VERSION_CREATED"

B. "MODEL_VERSION_TRANSITIONED_TO_PRODUCTION"

C. "MODEL_VERSION_TRANSITIONED_TO_STAGING"

D. "MODEL_VERSION_TRANSITIONED_STAGE"

E. "MODEL_VERSION_TRANSITIONED_TO_STAGING", "MODEL_VERSION_TRANSITIONED_TO_PRODUCTION"