ARA-C01 Online Practice Questions and Answers

An Architect has chosen to separate their Snowflake Production and QA environments using two separate Snowflake accounts.

The QA account is intended to run and test changes on data and database objects before pushing those changes to the Production account. It is a requirement that all database objects and data in the QA account need to be an exact copy of the database objects, including privileges and data in the Production account on at least a nightly basis.

Which is the LEAST complex approach to use to populate the QA account with the Production account's data and database objects on a nightly basis?

A. 1) Create a share in the Production account for each database 2) Share access to the QA account as a Consumer 3) The QA account creates a database directly from each share 4) Create clones of those databases on a nightly basis 5) Run tests directly on those cloned databases

B. 1) Create a stage in the Production account 2) Create a stage in the QA account that points to the same external object-storage location 3) Create a task that runs nightly to unload each table in the Production account into the stage 4) Use Snowpipe to populate the QA account

C. 1) Enable replication for each database in the Production account 2) Create replica databases in the QA account 3) Create clones of the replica databases on a nightly basis 4) Run tests directly on those cloned databases

D. 1) In the Production account, create an external function that connects into the QA account and returns all the data for one specific table 2) Run the external function as part of a stored procedure that loops through each table in the Production account and populates each table in the QA account

A company has several sites in different regions from which the company wants to ingest data.

Which of the following will enable this type of data ingestion?

A. The company must have a Snowflake account in each cloud region to be able to ingest data to that account.

B. The company must replicate data between Snowflake accounts.

C. The company should provision a reader account to each site and ingest the data through the reader accounts.

D. The company should use a storage integration for the external stage.

An Architect on a new project has been asked to design an architecture that meets Snowflake security, compliance, and governance requirements as follows:

1) Use Tri-Secret Secure in Snowflake

2) Share some information stored in a view with another Snowflake customer

3) Hide portions of sensitive information from some columns

4) Use zero-copy cloning to refresh the non-production environment from the production environment

To meet these requirements, which design elements must be implemented? (Choose three.)

A. Define row access policies.

B. Use theBusiness-Criticaledition of Snowflake.

C. Create a secure view.

D. Use the Enterprise edition of Snowflake.

E. Use Dynamic Data Masking.

F. Create a materialized view.

A healthcare company is deploying a Snowflake account that may include Personal Health Information (PHI). The company must ensure compliance with all relevant privacy standards.

Which best practice recommendations will meet data protection and compliance requirements? (Choose three.)

A. Use, at minimum, the Business Critical edition of Snowflake.

B. Create Dynamic Data Masking policies and apply them to columns that contain PHI.

C. Use the Internal Tokenization feature to obfuscate sensitive data.

D. Use the External Tokenization feature to obfuscate sensitive data.

E. Rewrite SQL queries to eliminate projections of PHI data based on current_role().

F. Avoid sharing data with partner organizations.

What is a characteristic of loading data into Snowflake using the Snowflake Connector for Kafka?

A. The Connector only works in Snowflake regions that use AWS infrastructure.

B. The Connector works with all file formats, including text, JSON, Avro, Ore, Parquet, and XML.

C. The Connector creates and manages its own stage, file format, and pipe objects.

D. Loads using the Connector will have lower latency than Snowpipe and will ingest data in real time.

The Data Engineering team at a large manufacturing company needs to engineer data coming from many sources to support a wide variety of use cases and data consumer requirements which include:

1) Finance and Vendor Management team members who require reporting and visualization

2) Data Science team members who require access to raw data for ML model development

3) Sales team members who require engineered and protected data for data monetization

What Snowflake data modeling approaches will meet these requirements? (Choose two.)

A. Consolidate data in the company's data lake and use EXTERNAL TABLES.

B. Create a raw database for landing and persisting raw data entering the data pipelines.

C. Create a set of profile-specific databases that aligns data with usage patterns.

D. Create a single star schema in a single database to support all consumers' requirements.

E. Create a Data Vault as the sole data pipeline endpoint and have all consumers directly access the Vault.

An Architect is designing a pipeline to stream event data into Snowflake using the Snowflake Kafka connector. The Architect's highest priority is to configure the connector to stream data in the MOST cost-effective manner.

Which of the following is recommended for optimizing the cost associated with the Snowflake Kafka connector?

A. Utilize a higher Buffer.flush.time in the connector configuration.

B. Utilize a higher Buffer.size.bytes in the connector configuration.

C. Utilize a lower Buffer.size.bytes in the connector configuration.

D. Utilize a lower Buffer.count.records in the connector configuration.

How can an Architect enable optimal clustering to enhance performance for different access paths on a given table?

A. Create multiple clustering keys for a table.

B. Create multiple materialized views with different cluster keys.

C. Create super projections that will automatically create clustering.

D. Create a clustering key that contains all columns used in the access paths.

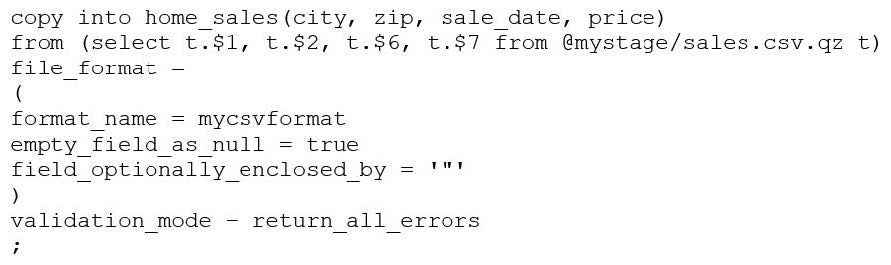

Consider the following COPY command which is loading data with CSV format into a Snowflake table from an internal stage through a data transformation query.

This command results in the following error:

SQL compilation error: invalid parameter 'validation_mode'

Assuming the syntax is correct, what is the cause of this error?

A. The VALIDATION_MODE parameter supports COPY statements that load data from external stages only.

B. The VALIDATION_MODE parameter does not support COPY statements with CSV file formats.

C. The VALIDATION_MODE parameter does not support COPY statements that transform data during a load.

D. The value return_all_errors of the option VALIDATION_MODE is causing a compilation error.